專欄介紹:《香儂說》為香儂科技打造的一款以機器學習與自然語言處理為專題的訪談節目。由斯坦福大學,麻省理工學院, 卡耐基梅隆大學,劍橋大學等知名大學計算機系博士生組成的“香儂智囊”撰寫問題,採訪頂尖科研機構(斯坦福大學,麻省理工學院,卡耐基梅隆大學,谷歌,DeepMind,微軟研究院,OpenAI 等)中人工智慧與自然語言處理領域的學術大牛, 以及在博士期間就做出開創性工作而直接進入頂級名校任教職的學術新星,分享他們廣為人知的工作背後的靈感以及對相關領域大方向的把控。

本期採訪嘉賓是 Facebook 人工智慧研究院(FAIR)首席科學家佐治亞理工學院教授 Devi Parikh。隨後我們計劃陸續推出 Eduard Hovy (卡耐基梅隆大學),Anna Korhonen (劍橋大學),Andrew Ng (斯坦福大學),Ilya Sukskever (OpenAI),William Yang Wang (加州大學聖芭芭拉分校),Jason Weston (Facebook 人工智慧研究院),Steve Young (劍橋大學) 等人的訪談,敬請期待。

Facebook 人工智慧研究院(FAIR)首席科學家、佐治亞理工互動計算學院教授、計算機視覺實驗室主任 Devi Parikh 是 2017 年 IJCAI 計算機和思想獎獲得者(IJCAI 兩個最重要的獎項之一,被譽為國際人工智慧領域的“菲爾茲獎”),並位列福布斯 2017 年“20 位引領 AI 研究的女性”榜單。她主要從事計算機視覺和樣式識別研究,具體研究領域包括計算機視覺、語言與視覺、通識推理、人工智慧、人機合作、語境推理以及樣式識別。

2008 年到現在,Devi Parikh 先後在計算機視覺三大頂級會議(ICCV、CVPR、ECCV)發表多篇論文。她所主持開發的視覺問題回答資料集(Visual Question Anwering)受到了廣泛的關註,併在 CVPR 2016 上組織了 VQA 挑戰賽和 VQA 研討會,極大地推動了機器智慧理解圖片這一問題的解決,並因此獲得了 2016 年美國國家科學基金會的“傑出青年教授獎(NSF CAREER Award)。她最近的研究集中在視覺、自然語言處理和推理的交叉領域,希望透過人和機器的互動來構造一個更加智慧的系統。

香儂科技:您和您的團隊開發的視覺問答資料集(VQA, Visual Question Answering Dataset, Antol et al. ICCV2015; Agrawal et al. IJCV 2017)極大地推動了該領域的發展。這一資料集囊括了包括計算機視覺,自然語言處理,常識推理等多個領域。您如何評估 VQA 資料集到目前產生的影響?是否實現了您開發此資料集的初衷?您期望未來幾年 VQA 資料集(及其進階版)對該領域產生何種影響?

Devi and Aishwarya:

VQA 資料集影響:

我們在 VQA 上的工作釋出後短期內受到了廣泛的關註 – 被超過 800 篇論文所取用((Antol et al. ICCV 2015; Agrawal et al. IJCV 2017),還在 15 年 ICCV 上“對話中的物體認知”研討會中獲得最佳海報獎(Best Poster Award)。

為了評估 VQA 的進展,我們用 VQA 第一版為資料集,在 2016 年 IEEE 國際計算機視覺與樣式識別會議(CVPR-16,IEEE Conference on Computer Vision and Pattern Recognition 2016)上組織了第一次 VQA 挑戰賽和第一次 VQA 研討會(Antol etal. ICCV 2015; Agrawal et al. IJCV 2017)。 挑戰和研討會都很受歡迎:來自學術界和工業界的 8 個國家的大約 30 個團隊參與了這一挑戰。在此次挑戰中,VQA 的準確率從 58.5% 提高到 67%,提升了 8.5%。

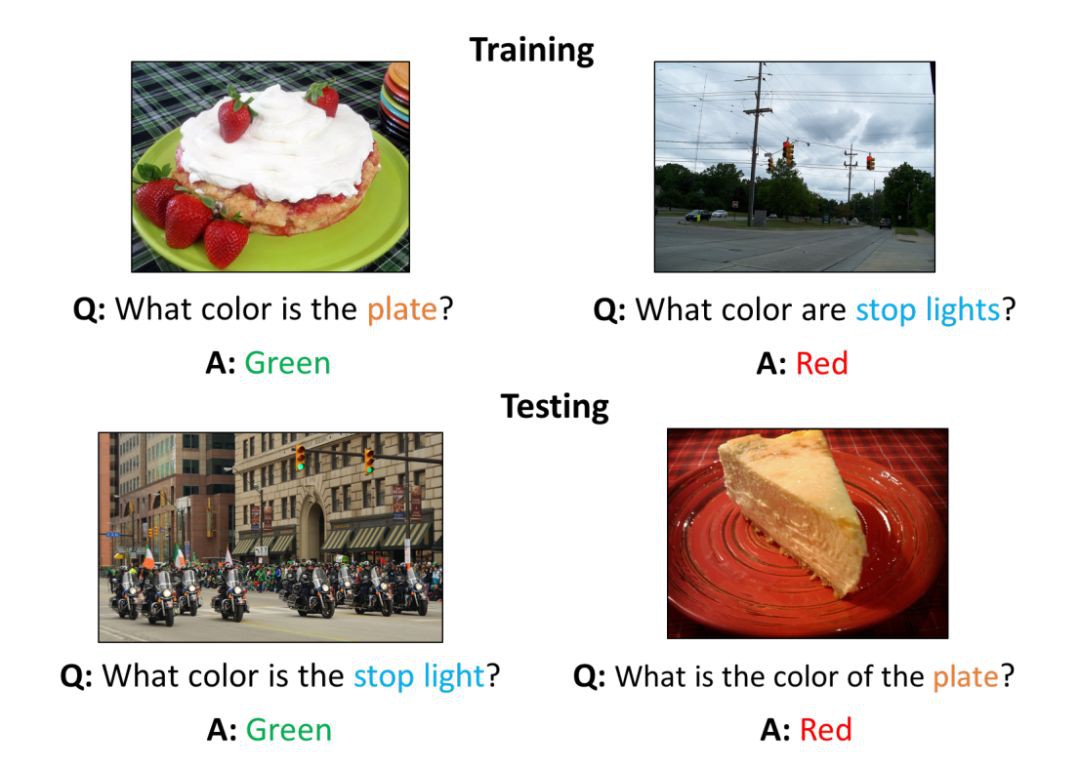

▲ 圖1. VQA資料集中的問答樣例

VQA v1 資料集和 VQA 挑戰賽不僅促進了原有解決方案的改進,更催生了一批新的模型和資料集。例如,使用空間註意力來聚焦與問題相關的影象區域的模型(Stacked Attention Networks, Yang et al., CVPR16);以分層的方式共同推理影象和問題應該註意何處的註意力神經網路(Hierarchical Question Image Co-attention, Lu et al., NIPS16);可以動態組合模組的模型,其中每個模組專門用於顏色分類等子任務(Neural Module Networks, Andreas et al., CVPR16);使用雙線性池化等運算融合視覺和語言特徵,從而提取更豐富的表徵的模型(Multimodal Compact Bilinear Pooling,Fukui et al.,EMNLP16)。

此外,VQA 也催生了許多新的資料集,包括側重於視覺推理和語言組合性的模型及相關資料集(CLEVR: A Diagnostic Dataset for Compositional Language and Elementary Visual Reasoning, Johnson et al., CVPR17);對於 VQA 第一版資料集的重新切分,使其可以用來研究語言的組合性問題 C-VQA(A Compositional Split of the VQA v1.0 Dataset, Agrawal et al., ArXiv17);還有需要模型剋服先驗言語知識的影響,必須要觀察影象才能回答問題的 VQA 資料集(Agrawal et al., CVPR18)。

簡而言之,我們在 VQA 上的工作已經在人工智慧中建立了一個新的多學科子領域。事實上,在這個資料集釋出不久,在一些重要的 AI 會議上,當你提交論文並選擇相關的子主題時,VQA 已成為一個新增選項。

是否實現了 VQA 開發的初衷:

儘管 VQA 社群在提高 VQA 模型的效能方面取得了巨大進步(VQA v2 資料集上的預測準確率在 3 年內從 54% 提高到 72%),但我們距離完全解決 VQA 任務還有很長的路要走。現有的 VQA 模型仍然缺乏很多必要的能力,比如:視覺落地 (visual grounding),組合性(compositionality),常識推理等,而這些能力是解決 VQA 的核心。

當我們開發資料集時,我們認為模型的泛化應該是一個很大挑戰,因為你很難期望模型在訓練集上訓練,就能很好地推廣到測試集。因為在測試時,模型可能會遇到關於影象的任何開放式問題,而很有可能在訓練期間沒有遇到過類似的問題。我們期望研究人員能嘗試利用外部知識來處理此類問題,但是在這方面的工作現階段還很少。不過我們已經看到了一些在該方面的初步進展(e.g., Narasimhan et al. ECCV 2018, Wang et al. PAMI 2017),希望將來會看到更多。

期望 VQA 資料集未來的影響:

我們希望 VQA 資料集對該領域能產生直接和間接的影響。直接的影響是指,我們期望在未來幾年內能湧現更多新穎的模型或技術,以進一步改進 VQA 第一版和 VQA 第二版資料集上的預測準確率。而間接的影響是指,我們希望更多全新的資料集和新任務能被開發出來,如 CLEVR(Johnson等人, CVPR17),Compositional VQA(Agrawal等人,ArXiv17),需要剋服先驗語言知識的 VQA (Agrawal et al.,CVPR18),基於影象的對話(Das et al.,CVPR17),需要具身認知的問答(Embodied Question Answering, Das et al.,CVPR18)。它們或直接構建在 VQA 資料集之上,或是為解決現有 VQA 系統的侷限性所構造。因此,我們期待 VQA 資料集(及其變體)能進一步將現有 AI 系統的能力提升,構造可以理解語言影象,能夠生成自然語言,執行動作併進行推理的系統。

香儂科技:最近,您的團隊釋出了 VQA 第二版(Goyal et al. CVPR 2017),其中包含對應同一問題有著不同答案的相似影象對。這樣的資料集更具挑戰性。通常,建立更具挑戰性的資料集會迫使模型編碼更多有用的資訊。但是,構建這樣的資料集會耗費大量人力。是否可以用自動的方式來生成幹擾性或對抗性的示例,從而將模型的預測能力提升到一個新的水平呢?

▲ 圖2. VQA 2.0資料集中的圖片及問題示例,每個問題對應著兩個相似、但卻需要不同回答的圖片。圖片來自論文Goyal et al. CVPR 2017

Devi, Yash, and Jiasen:構建大規模資料集確實是勞動密集型的工作。目前有一些基於現有標註自動生成新的問答對的工作。例如,Mahendru 等人 EMNLP 2017 使用基於模板的方法,根據 VQA 訓練集的問題前提,生成關於日常生活中的基本概念的新問答對。這一研究發現,將這些簡單的新問答對新增到 VQA 訓練資料可以提高模型的效能,尤其是在處理語言組合性(compositionality)的問題上。

在資料增強這一問題上,生成與影象相關的問題也是一個很重要的課題。與上述基於模板生成問題的方法不同,這種方法生成的問題更自然。但是,這些模型還遠不成熟,且無法對生成問題進行回答。因此,為影象自動生成準確的問答對目前還是非常困難的。要解決這一問題,半監督學習和對抗性例子生成可能會提供一些比較好的思路。

值得註意的是,關於影象問題的早期資料集之一是 Mengye Ren 等人在 2015 年開發的 Toronto COCO-QA 資料集。他們使用自然語言處理工具自動將關於影象的標註轉換為問答對。雖然這樣的問答對通常會留下奇怪的人為痕跡,但是將一個任務的標註(在本例中為字幕)轉換為另一個相關任務的標註(在這種情況下是問答)是一個極好的方法。

香儂科技:除 VQA 任務外,您還開發了基於影象的對話資料集——Visual Dialog Dataset(Das et al., CVPR 2017, Spotlight)。在收集資料時,您在亞馬遜勞務眾包平臺(一個被廣泛使用的眾包式資料標註平臺)上配對了兩個參與者,給其中一個人展示一張圖片和圖的標題,另一個人只能看到圖的標題,任務要求只能看到標題的參與者向另一個能看到圖片的參與者提出有關圖片的問題,以更好地想象這個影象的場景。這個資料集為我們清晰地揭示了影象中哪些資訊人們認為更值得獲取。您是否認為對模型進行預訓練來猜測人們可能會問什麼問題,可以讓模型具備更像人類的註意力機制,從而提高其問答能力?

▲ 圖3. 基於影象的對話任務,聊天機器人需要就影象內容與一個人展開對話。樣例來自論文Das et al., CVPR 2017

Devi and Abhishek:在這些對話中,問題的提出存在一些規律:對話總是開始於談論最醒目的物件及其屬性(如人,動物,大型物體等),結束在關於環境的問題上(比如,“影象中還有什麼?”,“天氣怎麼樣?”等)。如果我們可以使模型學習以區分相似影象為目的來提出問題並提供答案,從而使提問者可以猜出影象,就可以生成更好的視覺對話模型。Das & Kottur et al., ICCV 2017 展示了一些相關的工作。

香儂科技:組合性是自然語言處理領域的一個經典問題。您和您的同事曾研究評估和改進 VQA 系統的組合性(Agrawal et al. 2017)。一個很有希望的方向是結合符號方法和深度學習方法(例,Lu et al. CVPR 2018, Spotlight)。您能談談為什麼神經網路普遍不能系統性地泛化,以及我們能如何解決這個問題嗎?

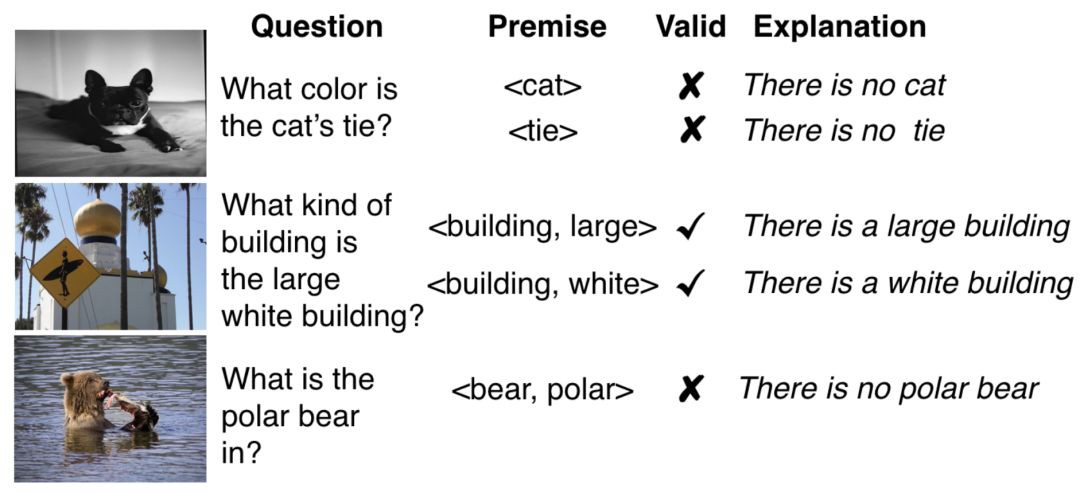

▲ 圖4. 組合性VQA資料集(C-VQA)的示例。測試集中詞語的組合是模型在訓練集中沒有學習過的,雖然這些組閤中的每一單個詞在訓練集中都出現過。圖片來源於Agrawal et al. 2017

Devi and Jiasen:我們認為產生這樣結果的一個原因是這些模型缺乏常識,如世界是如何運作的,什麼是可以預期的,什麼是不可預期的。這類知識是人類如何從例子中學習,或者說面對突發事件時依然可以做出合理決策的關鍵。當下的神經網路更接近樣式匹配演演算法,它們擅長從訓練資料集中提取出輸入與輸出之間複雜的相關性,但在某種程度上說,這也是它們可以做的全部了。將外部知識納入神經網路的方法現在仍然非常匱乏。

香儂科技:您的工作已經超越了視覺和語言的結合,擴充套件到了多樣式整合。在您最近發表的 Embodied Question Answering 論文中(Das et al. CVPR, 2018),您介紹了一項包括主動感知,語言理解,標的驅動導航,常識推理以及語言落地為行動的任務。這是一個非常有吸引力的方向,它更加現實,並且與機器人關係更加緊密。在這種背景下的一個挑戰是快速適應新環境。您認為在 3D 房間環境中訓練的模型(如您的那篇論文中的模型)會很快適應其他場景,如戶外環境嗎?我們是否必須在模型中專門建立元學習(meta-learning)能力才能實現快速適應?

▲ 在具身問答(Embodied QA)任務中,機器人透過探索周圍的3D環境來回答問題。為完成這項任務 ,機器人必須結合自然語言處理、視覺推理和標的導航的能力。圖片來自於Das et al. CVPR 2018

Devi and Abhishek:在目前的實體中,他們還不能推廣到戶外環境。這些系統學習到的東西與他們接受訓練時的影象和環境的特定分佈密切相關。因此,雖然對新的室內環境的一些泛化是可能的,但對於戶外環境,他們在訓練期間還沒有看到過足夠多的戶外環境示例。例如,在室內環境中,牆壁結構和深度給出了關於可行路徑和不可行路徑的線索。而在室外環境中,路錶面的情況(例如,是道路還是草坪)可能與系統能否在該路徑上通行更相關,而深度卻沒那麼相關了。

即使在室內的範圍內,從 3D 房間到更逼真的環境的泛化也是一個未完全解決的問題。元學習方法肯定有助於更好地推廣到新的任務和環境。我們還在考慮構建模組化的系統,將感知與導航功能分離,因此在新環境中只需要重新學習感知模組,然後將新的環境(例如更真實的環境)的視覺輸入對映到規劃模組更為熟悉的特徵空間。

香儂科技:您有一系列論文研究 VQA 任務中問題的前提(Ray et al. EMNLP 2016, Mahendru et al. 2017),並且您的研究發現,迫使 VQA 模型在訓練期間對問題前提是否成立進行判斷,可以提升模型在組合性(compositionality)問題上的泛化能力。目前 NLP 領域似乎有一個普遍的趨勢,就是用輔助任務來提高模型在主要任務上的效能。但並非每項輔助任務都一定會有幫助,您能說說我們要如何找到有用的輔助任務嗎?

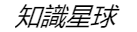

▲ 圖6. VQA問題中常常包含一些隱藏前提,會提示一部分影象資訊。因此Mahendru et al. 構造了“問題相關性預測與解釋”資料集(Question Relevance Prediction and Explanation, QRPE)。圖中例子展示了Mahendru et al. EMNLP 2017一文中“錯誤前提偵測”模型偵測到的一些前提不成立的問題

Devi and Viraj:在我們實驗室 Mahendru 等人 2017 年發表的論文中,作者的標的是透過推理問題的前提是否成立,來使 VQA 模型能夠更智慧地回答不相關或以前從未遇到的問題。我們當時有一個想法,認為用這樣的方式來擴充資料集可能幫助模型將物體及其屬性分離開,這正是組合性問題的實質,而後來經過實驗發現確實如此。

更廣義地來說,我們現在已經看到了很多這種跨任務遷移學習的例子。例如,圍繞問題回答,機器翻譯,標的導向的對話等多工展開的 decaNLP 挑戰。或者,將用於 RGB 三維重建,語意分割和深度估計(depth estimation)的模型一起訓練,構建一個強大的視覺系統,用於完成需要具身認知的任務(Embodied Agents, Das et al. 2018)。當然也包括那些首先在 ImageNet 上預訓練,然後在特定任務上微調這樣的被廣泛使用的方法。所有這些都表明,即使對於多個跨度很大的任務,多工下學習的表徵也可以非常有效地遷移。但不得不承認,發現有意義的輔助任務更像是一門藝術,而不是科學。

香儂科技:近年來,深度學習模型的可解釋性受到了很多關註。您也有幾篇關於解釋視覺問答模型的論文,比如理解模型在回答問題時會關註輸入的哪個部分,或是將模型註意力與人類註意力進行比較(Das et al. EMNLP 2016, Goyal et al. ICML 2016 Workshop on Visualization for Deep Learning, Best Student Paper)。您認為增強深度神經網路的可解釋性可以幫助我們開發更好的深度學習模型嗎?如果是這樣,是以什麼方式呢?

▲ 圖7. 透過尋找模型在回答問題時關註了輸入問題中哪部分欄位(高亮部分顯示了問題中的詞彙重要性的熱圖)來解釋模型預測的機制。比如上面問題中“whole”是對模型給出回答“no”最關鍵的詞語。圖片來源於論文Goyal et al. ICML 2016 Workshop on Visualization for Deep Learning

Devi and Abhishek:我們的 Grad-CAM 論文(Selvarajuet et al., ICCV 2017)中的一段話對這個問題給出了答案:

從廣義上講,透明度/可解釋性在人工智慧(AI)演化的三個不同階段都是有用的。首先,當 AI 明顯弱於人類並且尚不能可靠地大規模應用時(例如視覺問題回答),透明度和可解釋性的目的是識別出模型為什麼失敗,從而幫助研究人員將精力集中在最有前景的研究方向上;其次,當人工智慧與人類相當並且可以大規模使用時(例如,在足夠資料上訓練過的對特定類別進行影象分類的模型),研究可解釋性的目的是在使用者群體中建立對模型的信心。第三,當人工智慧顯著強於人類(例如國際象棋或圍棋)時,使模型可解釋的目的是機器教學,即讓機器來教人如何做出更好的決策。

可解釋性確實可以幫助我們改進深度神經網路模型。對此我們發現的一些初步證據如下:如果 VQA 模型被限制在人們認為與問題相關的影象區域內尋找答案,模型在測試時可以更好的落地並且更好地推廣到有不同“答案先驗機率分佈”的情況中(即 VQA-CP 資料集這樣的情況)。

可解釋性也常常可以揭示模型所學到的偏見。這樣做可以使系統設計人員使用更好的訓練資料或採取必要的措施來糾正這種偏見。我們的 Grad-CAM 論文(Selvaraju et al.,ICCV 2017)的第 6.3 節就報告了這樣一個實驗。這表明,可解釋性可以幫助檢測和消除資料集中的偏見,這不僅對於泛化很重要,而且隨著越來越多的演演算法被應用在實際社會問題上,可解釋性對於產生公平和符合道德規範的結果也很重要。

香儂科技:在過去,您做了很多有影響力的工作,併發表了許多被廣泛取用的論文。您可以和剛剛進入 NLP 領域的學生分享一些建議,告訴大家該如何培養關於研究課題的良好品味嗎?

Devi:我會取用我從 Jitendra Malik(加州大學伯克利分校電子工程與電腦科學教授)那裡聽到的建議。我們可以從兩個維度去考慮研究課題:重要性和可解決性。有些問題是可以解決的,但並不重要;有些問題很重要,但基於整個領域目前所處的位置,幾乎不可能取得任何進展。努力找出那些重要、而且你可以(部分)解決的問題。當然,說起來容易做起來難,除了這兩個因素之外也還有其他方面需要考慮。例如,我總是被好奇心驅使,研究自己覺得有趣的問題。但這可能是對於前面兩個因素很有用的一個一階近似。

參考文獻

[1]. Antol S, Agrawal A, Lu J, et al. VQA: Visual question answering[C]. Proceedings of the IEEE International Conference on Computer Vision. 2015: 2425-2433.

[2]. Yang Z, He X, Gao J, et al. Stacked attention networks for image question answering[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016: 21-29.

[3]. Lu J,Yang J, Batra D, et al. Hierarchical question-image co-attention for visual question answering[C]. Proceedings of the Advances In Neural Information Processing Systems. 2016: 289-297.

[4]. Andreas J, Rohrbach M, Darrell T, et al. Neural module networks[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016: 39-48.

[5]. Fukui A, Park D H, Yang D, et al. Multimodal compact bilinear pooling for visual question answering and visual grounding[J]. arXiv preprint arXiv:1606.01847,2016.

[6]. Johnson J, Hariharan B, van der Maaten L, et al. CLEVR: A diagnostic dataset for compositional language and elementary visual reasoning[C]. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2017: 1988-1997.

[7]. Vo M, Yumer E, Sunkavalli K, et al. Automatic Adaptation of Person Association for Multiview Tracking in Group Activities[J]. arXiv preprint arXiv:1805.08717, 2018.

[8]. Agrawal A, Kembhavi A, Batra D, et al. C-vqa: A compositional split of the visual question answering (vqa) v1.0 dataset. arXiv preprint arXiv: 1704.08243, 2017.

[9]. Agrawal A, Batra D, Parikh D, et al. Don’t just assume; look and answer: Overcoming priors for visual question answering[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 4971-4980.

[10]. Das A, Kottur S, Gupta K, et al. Visual dialog[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017: 1080–1089.

[11]. Das A, Datta S, Gkioxari G, et al. Embodied question answering[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2018.

[12]. Goyal Y, Khot T, Summers-Stay D, et al. Making the V in VQA matter: Elevating the role of image understanding in Visual Question Answering[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017, 1(2):3.

[13]. MahendruA, Prabhu V, Mohapatra A, et al. The Promise of Premise: Harnessing Question Premises in Visual Question Answering[C]. Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. 2017: 926-935.

[14]. Ren M, Kiros R, Zemel R. Image question answering: A visual semantic embedding model and a new dataset[J]. Proceedings of the Advances in Neural Information Processing Systems, 2015,1(2): 5.

[15]. Fang H S, Lu G, Fang X, et al. Weakly and Semi Supervised Human Body Part Parsing via Pose-Guided Knowledge Transfer[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 70-78.

[16]. Ray A, Christie G, Bansal M, et al. Question relevance in VQA: identifying non-visual and false-premise questions[J]. arXiv preprint arXiv: 1606.06622,2016.

[17]. Das A, Agrawal H, Zitnick L, et al. Human attention in visual question answering: Do humans and deep networks look at the same regions?[J]. Computer Vision and Image Understanding, 2017, 163: 90-100.

[18]. Goyal Y, Mohapatra A, Parikh D, et al. Towards transparent AI systems: Interpreting visual question answering models[J]. arXiv preprint arXiv:1608.08974, 2016.

[19]. Selvaraju R R, Cogswell M, Das A, et al. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization[C]. Proceedings of the International Conference on Computer Vision (ICCV). 2017: 618-626.

香儂招聘

香儂科技 (http://shannon.ai/) ,是一家深耕金融領域的人工智慧公司,旨在利用機器學習和人工智慧演演算法提取、整合、分析海量金融資訊,讓 AI 為金融各領域賦能。

香儂科技在 2017 年 12 月創立,獲紅杉中國基金獨家數千萬元融資。創始人之一李紀為是斯坦福大學計算機專業歷史上第一位僅用三年時間就獲得博士的人,入選福布斯中國 2018 “30 Under 30” 精英榜。在由劍橋大學研究員 Marek Rei 釋出的一項統計中,李紀為博士在最近三年世界所有人工智慧研究者中,以第一作者發表的頂級會議文章數量高居第一位。公司博士佔比超 30%,成員皆來自斯坦福、MIT、CMU、Princeton、北京大學、清華大學、人民大學、南開大學等國內外知名學府。

香儂科技現全面招募自然語言處理工程師,機器視覺工程師等演演算法工程師。如果你想全職或實習加入香儂研發團隊,請點選檢視香儂招聘貼

簡歷投遞郵箱:hr@shannonai.com

Tips:聽說在郵件標題中註明“PaperWeekly”,能大大提升面試邀約率

英文采訪稿

ShannonAI: The Visual Question Answering Dataset (Antol et al. ICCV 2015; Agrawal et al. IJCV 2017) you and your colleagues introduced has greatly pushed the field forward. It integrates various domains including computer vision, natural language processing, common sense reasoning, etc. How would you evaluate the impact of the VQA dataset so far? Have the original goals of introducing this dataset been realized? What further impact do you anticipate the VQA dataset (and its variants) to have on the field in the next few years?

Devi and Aishwarya: (1) Our work on VQA has witnessed tremendous interest in a short period of time (3 years) – over 800 citations of the papers (Antol et al.ICCV 2015; Agrawal et al. IJCV 2017), ~1000 downloads of the dataset, best poster award at the Workshop on Object Understanding for Interaction (ICCV15).

To benchmark progress in VQA, we organized the first VQA Challenge and the first VQA workshop at CVPR16 on the VQA v1 dataset (Antolet al. ICCV 2015; Agrawal et al. IJCV 2017). Both the challenge and the workshop were very well received. Approximately 30 teams from 8 countries across academia and industry participated in the challenge. During this challenge, the state-of-the-art in VQA improved by 8.5% from 58.5% to 67%.

In addition to the improvement in the state-of-the-art, the VQA v1 dataset and the first VQA Challenge led to the development of a variety of models and follow-up datasets proposed for this task. For example, we saw models using spatial attention to focus on image regions relevant to question (Stacked Attention Networks, Yang et al., CVPR16), we saw models that jointly reason about image and question attention, in a hierarchical fashion (Hierarchical Question Image Co-attention, Lu et al., NIPS16), models which dynamically compose modules, where each module is specialized for a subtask such as color classification (Neural Module Networks, Andreas et al., CVPR16), models that study how to fuse thevision and language features using operators such as bilinear pooling to extract rich representations(Multimodal Compact Bilinear Pooling, Fukui et al., EMNLP16), and we have seen datasets as well as models that focus on visual reasoning and compositionalityin language (CLEVR: A Diagnostic Dataset for Compositional Language and Elementary Visual Reasoning, Johnson et al., CVPR17), C-VQA: A Compositional Split of the Visual Question Answering (VQA) v1.0 Dataset (Agrawal et al., ArXiv17), Don’t Just Assume; Look and Answer: Overcoming Priors for Visual Question Answering (Agrawal et al., CVPR18).

In short, our work on VQA has resulted in creation of a new multidisciplinary sub-field in AI! In fact, at one of the major conferences (I think it was NIPS) — when you submit a paper and have to pick the relevant sub-topic — VQA is one of the options 🙂

(2) Although the VQA community has made tremendous progress towards improving the state-of-the-art (SOTA) performance on the VQA dataset (the SOTA on VQA v2 dataset improved from 54% to 72% in 3 years), we are still far from completely solving the VQA dataset and the VQA task in general. Existing VQA models still lack a lot of skills that are core to solving VQA — visual grounding, compositionality, common sense reasoning etc.

When we introduced the dataset, we believed that it would be very difficult for models to just train on the training set and generalize well enough to the test set. After all, at test time, the model might encounter any open-ended question about the image. It is quite plausible that it won’t have seen a similar question during training. We expected researchers to explore the use of external knowledge to deal with such cases. However, we have seen less work in that direction than we had anticipated and even hoped for. We have seen some good initial progress in this space (e.g., Narasimhan et al. ECCV 2018, Wang et al. PAMI 2017) and hopefully we’ll see more in the future.

(3) We expect the VQA dataset to have both direct and indirect impact on the field. Directly, we expect more novel models/ techniques to be developed in next few years in order to further improve the SOTA on the VQA v1 and VQA v2 datasets. Indirectly, we expect that more novel datasets and novel tasks such as CLEVR (Johnson et al., CVPR17), Compositional VQA (C-VQA)(Agrawal et al., ArXIv17), VQA under Changing Priors (VQA-CP) (Agrawal et al.,CVPR18), Visual Dialog (Das et al., CVPR17), Embodied Question Answering (Das et al., CVPR18) will be developed either by directly building on top of the VQA dataset or by being motivated by what skills existing VQA systems are lacking and developing datasets/ tasks that evaluate those skills. Thus, we expect the VQA dataset (and its variants) to further push the capabilities of the existing AI systems towards agents that can see, talk, act, and reason.

ShannonAI: Recently your team released VQA2.0 (Goyal et al. CVPR 2017), a balanced dataset that contains pairs of similar images that result in different answers to the same question. In general, creating more challenging datasets seems to force the models to encode more information that are useful. However, constructing such datasets can be pretty labor-intensive. Is there any automatic way to generate distractors/adversarial examples that may help push the models to their limits?

Devi, Yash, and Jiasen: Constructing a large-scale dataset is indeed labor-intensive. There are a few works which focus on automatically generating new question-answer pairs based on existing annotations. For example, Mahendruet al., EMNLP 2017 uses a template-based method to generate new question-answer pairs about elementary concepts from premises of questions in the VQA training set. They show that adding these simple question-answer pairs to VQA training data can improve performance on tasks requiring compositional reasoning.

Visual question generation which aims to generate questions related to the image is another topic connected to data augmentation. Different from the template-based method described above, the generated question is more natural. However, these models are far from perfect and answers to these questions still need to be crowdsourced. Hence, automatically generating accurate question-answer pairs for images is currently quite difficult and noisy. Semi-supervised learning and adversarial examples generation are certainly promising directions.

Note that one of the earlier datasets on questions about images was the Toronto COCO-QA Dataset dataset by Ren et al. in 2015. They automatically converted captions about images into question-answer pairs using NLP tools. While the question-answer pairs often have strange artifacts, it is a great way to convert annotations from one task (in this case captioning) into annotations for another related task (in this case question-answering).

ShannonAI: In addition to the VQA task, you also introduced the Visual Dialog Dataset (Das et al., CVPR 2017 Spotlight). When collecting the data, you paired two participants on Amazon Mechanical Turk and asked one of them to ask questions about an image to the other. This dataset offers us great insight into what naturally attracts human’s attention in an image and what information humans find worth requesting. Do you think pre-training the model to guess what questions people might ask could equip the model with more human-like attention mechanism, which would in turn enhance its question answering ability?

Devi and Abhishek: There is a somewhat regular pattern to the line of questioning in these dialogs — the conversation starts with humans talking about the most salient objects and their attributes (people, animals, large objects, etc.), and ends with questions about the surroundings(“what else is in the image?”, “how’s the weather?”, etc.).

And so if we were to equip models with this sort of a capability where they learn to ask questions and provide answers in an image-discriminative manner so that the questioner can guess the image, that could lead to better visual dialog models. Some work towards this is available in Das & Kotturet al., ICCV 2017.

ShannonAI: Compositionality has been a long-standing topic in NLP. You and your colleagues have focused on evaluating and improving the compositionality of the VQA systems (Agrawal et al. 2017). One promising direction is to combine symbolic approaches and deep learning approaches (e.g., Lu et al. CVPR 2018 Spotlight). Could you comment on why neural networks are generally bad at performing systematic generalization and how we could possibly tackle that?

Devi and Jiasen: We think one reason for this is that these models lack common sense — knowledge of how the world works, what is expected vs.unexpected, etc. Knowledge of this sort is key to how humans learn from a few examples, or are robust — i.e., can make reasonable decisions — even when faced with novel “out of distribution” instances. Neural networks, in their current incarnation, are closer to pattern matching algorithms that are very good at squeezing complex correlations out from the training dataset, but to some degree, that is all they can do well. Mechanisms to incorporate external knowledge into neural networks is still significantly lacking.

ShannonAI: Your work has gone beyond integrating vision and language, and has extended to multi-modal integration. In your recent “The Embodied VQA” paper, you introduced a task that incorporates active perception, language understanding, goal-driven navigation, common sense reasoning, and grounding of language into actions. This is a very attractive direction since it is more realistic and tightly connects to robotics. One challenge under this context is fast adaptation to new environments. Do you think models trained in the House3D environment (as in the paper) would quickly adapt to other scenarios, like outdoor environment? Do we have to explicitly build meta-learning ability in the models in order to achieve fast adaptation?

Devi and Abhishek: In their current instantiation, they probably would not generalize to outdoor environments. These agents are tightly tied to the specific distribution of images and environments they’re trained in. So while some generalization to novel indoor environments is expected, they haven’t seen sufficient examples of outdoor environments during training. In indoor environments for instance, wall structures and depth give strong cues about navigable vs. unnavigable paths. In an outdoor environment, the semantics of the surfaces (as opposed to depth) are likely more relevant to whether an agent should navigate on a path or not (e.g., road vs. grass).

Even within the scope of indoor navigation, generalizing from House3D to more realistic environments is not a solved problem. And yes, meta-learning approaches would surely be useful for better generalization to new tasks and environments. We are also thinking about building modular agents where perception is decoupled from task-specific planning + navigation, so we just need to re-learn the perception module to map from visual input from the new distribution (say more photo realistic environments) to a feature space the planning module is familiar with.

ShannonAI: You have a series of papers looking at premises of questions in VQA tasks (Ray et al. EMNLP 2016, Mahendru et al. 2017) and you showed that forcing standard VQA models to reason about premises during training can lead to improvements on tasks requiring compositional reasoning. There seems to be a general trend in NLP to include auxiliary tasks to improve model performance on primary tasks. However, not every auxiliary task is guaranteed beneficial. Can you comment on how we could find good auxiliary tasks?

Devi and Viraj: In the context of Mahendru et al 2017, the authors’ goal was to equip VQA models with the ability to respond more intelligently to an irrelevant or previously unseen question, by reasoning about its underlying premises. We had some intuition that explicitly augmenting the dataset with such premises could aid the model in disentangling objects and attributes, which is essentially the building block of compositionality, and found that to be the case. More generally though, we have now seen successful examples of such transfer across tasks. For example, the decaNLP challenge that is organized around building multitask models for question answering, machine translation, goal-oriented dialog, etc. Or, the manner in which models trained for RGB reconstruction, semantic segmentation, and depth estimation, are combined to build an effective vision system for training Embodied Agents (Das et al 2018). And of course, the now popular approach of finetuning classification models pretrained on ImageNet to a wide range of tasks. All of these seem to suggest that such learned representations can transfer quite effectively even across reasonably diverse tasks. But agreed, identifying meaningful auxiliary tasks is a bit more of an art than a science.

ShannonAI: In recent years, interpretability of deep learning models has received a lot of attention. You also have several papers dedicated to interpreting visual question answering models, e.g., to understand which part of the input the models focus on while answering questions, or compare model attention with human attention (Das et al. EMNLP 2016, Goyal et al. ICML2016 Workshop on Visualization for Deep Learning, Best Student Paper). Do you think enhancing the interpretability of deep neural network could help us develop better deep learning models? If so, in what ways?

Devi and Abhishek: A snippet from our Grad-CAM paper (Selvaraju et al.,ICCV 2017) has a crisp answer to this question:

“Broadly speaking, transparency is useful at three different stages of Artificial Intelligence (AI) evolution. First, when AI is significantly weaker than humans and not yet reliably ‘deployable’ (e.g.visual question answering), the goal of transparency and explanations is to identify the failure modes, thereby helping researchers focus their efforts on the most fruitful research directions. Second, when AI is on par with humans and reliably ‘deployable’ (e.g., image classification on a set of categories trained on sufficient data), the goal is to establish appropriate trust and confidence in users. Third, when AI is significantly stronger than humans (e.g. chess or Go), the goal of explanations is in machine teaching – i.e., a machine teaching a human about how to make better decisions.”

So yes, interpretability can help us improve deep neural network models. Some initial evidence we have of this is the following: if VQA models are forced to base their answer on regions of the image that people found to be relevant to the question, the models become better grounded and generalize better to changing distributions of answer priors at test time(i.e., on the VQA-CP dataset).

Interpretability also often reveals biases the model shave learnt. Revealing these biases can allow the system designer to use better training data or take other actions necessary to rectify this bias. Section 6.3 in our Grad-CAM paper (Selvaraju et al., ICCV 2017) paper reports such an experiment. This demonstrates that interpretability can help detect and remove biases in datasets, which is important not just for generalization, but also for fair and ethical outcomes as more algorithmic decisions are made in society.

ShannonAI: In the past you have done a lot of influential work and have published many widely cited papers. Could you share with students just entering the field of NLP some advice on how to develop good taste for research problems?

Devi: I’ll re-iterate advice I’ve heard from Jitendra Malik. One can think of research problems as falling somewhere along two axes: importance and solvability. There are problems that are solvable but not important to solve. There are problems that are important but are nearly impossible to make progress on given where the field as a whole currently stands. Try to identify problems that are important, and where you can make adent. Of course, this is easier said than done and there may be other factors to consider beyond these two (for instance, I tend to be heavily driven by what I find interesting and am just curious about). But this might be a useful first approximation to go with.

?

現在,在「知乎」也能找到我們了

進入知乎首頁搜尋「PaperWeekly」

點選「關註」訂閱我們的專欄吧

關於PaperWeekly

PaperWeekly 是一個推薦、解讀、討論、報道人工智慧前沿論文成果的學術平臺。如果你研究或從事 AI 領域,歡迎在公眾號後臺點選「交流群」,小助手將把你帶入 PaperWeekly 的交流群裡。

▽ 點選 | 閱讀原文 | 獲取最新論文推薦

知識星球

知識星球